Liquid AI, an AI startup spun out from MIT, has announced its first series of generative AI models, which it refers to as Liquid Foundation Models (LFMs).

“Our mission is to create best-in-class, intelligent, and efficient systems at every scale – systems designed to process large amounts of sequential multimodal data, to enable advanced reasoning, and to achieve reliable decision-making,” Liquid explained in a post.

According to Liquid, LFMs are “large neural networks built with computational units deeply rooted in the theory of dynamical systems, signal processing, and numerical linear algebra.” By comparison, most LLMs are built on the transformer architecture. Liquid says that because LFMs do not use that architecture, they have a much smaller memory footprint than transformer-based LLMs.

“This is particularly true for long inputs, where the KV cache in transformer-based LLMs grows linearly with sequence length. By efficiently compressing inputs, LFMs can process longer sequences on the same hardware,” Liquid wrote.
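To make the linear growth Liquid describes concrete, here is a back-of-the-envelope sketch of KV cache size for a generic transformer. The configuration (32 layers, 8 key/value heads of dimension 128, 16-bit values) is hypothetical and does not describe any specific model, and the function name `kv_cache_bytes` is ours. The sketch says nothing about how LFMs themselves compress their inputs.

```python
def kv_cache_bytes(seq_len: int, n_layers: int, n_kv_heads: int, head_dim: int,
                   bytes_per_elem: int = 2, batch_size: int = 1) -> int:
    """Bytes a standard transformer needs to cache keys and values for a context."""
    # Factor of 2: each token stores one key vector and one value vector
    # for every KV head in every layer.
    per_token = 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem
    # The cache holds one entry per token, so total size is linear in seq_len.
    return per_token * seq_len * batch_size


# Hypothetical configuration, chosen only for illustration.
CONFIG = dict(n_layers=32, n_kv_heads=8, head_dim=128, bytes_per_elem=2)

for seq_len in (4_096, 32_768, 131_072):
    gib = kv_cache_bytes(seq_len, **CONFIG) / 2**30
    print(f"{seq_len:>7} tokens -> {gib:6.2f} GiB")
```

With these assumed numbers, the cache grows from 0.5 GiB at 4,096 tokens to 4 GiB at 32,768 tokens and 16 GiB at 131,072 tokens: 32 times the tokens costs 32 times the memory, on top of the model weights. That per-token cost is the overhead Liquid says LFMs reduce.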

Liquid’s models are general-purpose and can be used to model any type of sequential data, such as video, audio, text, time series, and signals.

According to the company, LFMs perform well on general and expert knowledge, mathematics and logical reasoning, and long-context tasks, which they handle efficiently and effectively.

The areas where they fall short today include zero-shot code tasks, precise numerical calculations, time-sensitive information, human preference optimization techniques, and “counting the r’s in the word ‘strawberry,’ ” the company said.

Currently, their main language is English, but they also have secondary multilingual capabilities in Spanish, French, German, Chinese, Arabic, Japanese, and Korean.

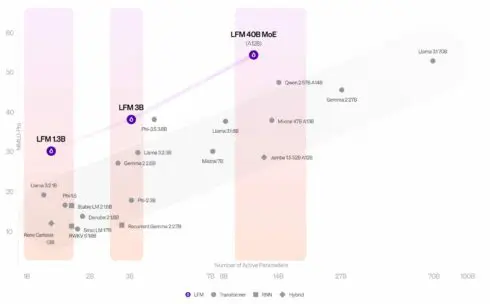

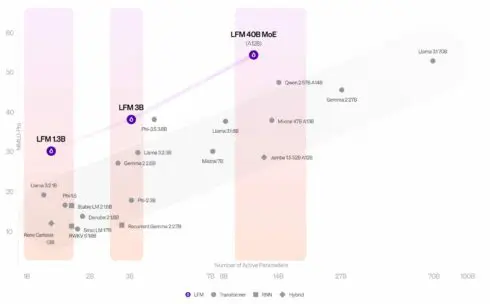

The first series of LFMs includes three models:

- A 1.3B model designed for resource-constrained environments

- A 3.1B model ideal for edge deployments

- A 40.3B Mixture of Experts (MoE) model optimized for more complex tasks

Liquid says it is taking an open-science approach to its research and will openly publish its findings and methods to help advance the AI field, but it will not open-source the models themselves.

“This allows us to continue building on our progress and maintain our edge in the competitive AI landscape,” Liquid wrote.

According to Liquid, it is working to optimize its models for NVIDIA, AMD, Qualcomm, Cerebras, and Apple hardware.

Interested users can try out the LFMs now on Liquid Playground, Lambda (Chat UI and API), and Perplexity Labs. The company is also working to make them available on Cerebras Inference.