Tokenization is a critical yet often overlooked component of natural language processing (NLP). In this guide, we’ll explain tokenization, its use cases, pros and cons, and why it’s involved in almost every large language model (LLM).


What is tokenization in NLP?

Tokenization is an NLP method that converts text into numerical formats that machine learning (ML) models can use. When you send your prompt to an LLM such as Anthropic’s Claude, Google’s Gemini, or a member of OpenAI’s GPT series, the model does not directly read your text. These models can only take numbers as inputs, so the text must first be converted into a sequence of numbers using a tokenizer.

One simple way to tokenize text is to split it into separate words and assign a number to each unique word:

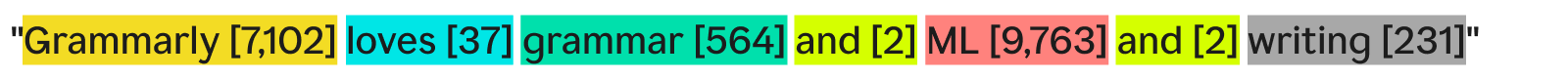

“Grammarly loves grammar and ML and writing” might become:

[7102, 37, 564, 2, 9763, 2, 231]

Each word (and its associated number) is a token. An ML model can use this sequence of tokens to run its operations and produce its output. This output is usually a token number, which is converted back into text using the reverse of the same tokenization process. In practice, word-by-word tokenization is useful as an example but is rarely used in industry, for reasons we will see later.
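The word-level scheme above can be sketched in a few lines of Python. The vocabulary here is hypothetical: it simply reuses the token numbers from the example (7102 for “Grammarly,” 2 for “and,” and so on), and `encode`/`decode` are illustrative names, not a real library's API.

```python
# Hypothetical word-level vocabulary reusing the example's token numbers.
VOCAB = {"Grammarly": 7102, "loves": 37, "grammar": 564,
         "and": 2, "ML": 9763, "writing": 231}
# Reverse mapping, used to turn token numbers back into words.
ID_TO_WORD = {token_id: word for word, token_id in VOCAB.items()}

def encode(text: str) -> list[int]:
    """Split on whitespace and look up each word's token number."""
    return [VOCAB[word] for word in text.split()]

def decode(token_ids: list[int]) -> str:
    """Reverse the process: look up each number and rejoin with spaces."""
    return " ".join(ID_TO_WORD[token_id] for token_id in token_ids)

ids = encode("Grammarly loves grammar and ML and writing")
print(ids)          # [7102, 37, 564, 2, 9763, 2, 231]
print(decode(ids))  # Grammarly loves grammar and ML and writing
```

Note that “and” appears twice in the sentence but maps to the same token number both times.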

One final thing to note is that tokenizers have vocabularies—the complete set of tokens they can handle. A tokenizer that knows basic English words but not company names may not have “Grammarly” as a token in its vocabulary, leading to tokenization failure.
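One common workaround for missing words, sketched below under our own assumptions, is to reserve a special “unknown” token (here a hypothetical `<unk>` with ID 0) so that encoding doesn't crash on out-of-vocabulary words. The cost is that the word itself is lost.

```python
# Hypothetical vocabulary that lacks the company name "Grammarly".
UNK_ID = 0  # reserved ID for any word the tokenizer doesn't know
VOCAB = {"<unk>": UNK_ID, "loves": 37, "grammar": 564,
         "and": 2, "ML": 9763, "writing": 231}

def encode(text: str) -> list[int]:
    """Map each word to its ID, falling back to UNK_ID for unknown words."""
    return [VOCAB.get(word, UNK_ID) for word in text.split()]

print(encode("Grammarly loves grammar"))  # [0, 37, 564]
```

Every unknown word collapses to the same ID, so the model can no longer tell “Grammarly” apart from any other unfamiliar name.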

Types of tokenization

In general, tokenization is turning a chunk of text into a sequence of numbers. Though it’s natural to think of tokenization at the word level, there are many other tokenization methods, one of which—subword tokenization—is the industry standard.

Word tokenization

Word tokenization is the example we saw before, where text is split into individual words.

Word tokenization’s main benefit is that it’s easy to understand and visualize. However, it has a few shortcomings:

- When text is split only on spaces, punctuation stays attached to the words, so the period in “writing.” makes it a different token from “writing”.

- Novel or uncommon words (such as “Grammarly”) take up a whole token.
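The punctuation problem is easy to see in code. This sketch compares a plain whitespace split with a simple regular expression (our own choice for illustration, not a rule taken from any particular tokenizer) on a variant of the example sentence that ends in a period.

```python
import re

sentence = "Grammarly loves ML and writing."

# Splitting on whitespace alone leaves the period glued to "writing".
naive = sentence.split()
print(naive)  # ['Grammarly', 'loves', 'ML', 'and', 'writing.']

# Match either a run of word characters or a single punctuation mark,
# which separates the period into its own token.
WORD_OR_PUNCT = re.compile(r"\w+|[^\w\s]")
separated = WORD_OR_PUNCT.findall(sentence)
print(separated)  # ['Grammarly', 'loves', 'ML', 'and', 'writing', '.']
```

Even with punctuation handled, “Grammarly” still needs its own vocabulary entry, which is the second shortcoming.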

As a result, word tokenization can create vocabularies with hundreds of thousands of tokens. Large vocabularies make training and inference much less efficient, because the embedding matrix that converts between tokens and numbers needs one row per token and so becomes huge.
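To make the size concern concrete: the embedding matrix has one row of numbers for each vocabulary entry, so its size grows linearly with the vocabulary. The sketch below assumes a hypothetical embedding width of 4,096 numbers per token; the vocabulary sizes are illustrative and not taken from any specific model.

```python
def embedding_parameters(vocab_size: int, embedding_dim: int) -> int:
    """Number of values in an embedding matrix: one row of
    `embedding_dim` numbers for every token in the vocabulary."""
    return vocab_size * embedding_dim

EMBEDDING_DIM = 4096  # hypothetical width, chosen for illustration

for vocab_size in (50_000, 500_000):
    params = embedding_parameters(vocab_size, EMBEDDING_DIM)
    print(f"{vocab_size:>7,} tokens -> {params:,} parameters")
#  50,000 tokens -> 204,800,000 parameters
# 500,000 tokens -> 2,048,000,000 parameters
```

A tenfold larger vocabulary means a tenfold larger matrix, and most of those extra rows belong to words that rarely appear in training data.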

Additionally, there would be many infrequently used words, and the NLP models wouldn't have enough relevant training data to return accurate responses for them. If a new word were invented tomorrow, an LLM using word tokenization would need to be retrained to incorporate it.

Subword tokenization

Subword tokenization splits text into chunks smaller than or equal to words. There is no fixed size for each token; each token (and its length) is determined by the training process. Subword tokenization is the industry standard for LLMs. Below is an example, with tokenization done by the GPT-4o tokenizer:

Here, the uncommon word “Grammarly” gets broken down into three tokens: “Gr,” “amm,” and “arly.” Meanwhile, the other words are common enough in text that they form their own tokens.

Subword tokenization allows for smaller vocabularies, meaning more efficient and cheaper training and inference. Subword tokenizers can also break down rare or novel words into combinations of smaller, existing tokens. For these reasons, many NLP models use subword tokenization.
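To see how a subword tokenizer can fall back on smaller pieces, here is a minimal sketch using greedy longest-match segmentation, similar to how WordPiece tokenizers segment words. This is a swapped-in technique for illustration; BPE tokenizers like GPT-4o's instead replay learned merges, as described later. The toy vocabulary is hypothetical and was chosen so that “Grammarly” splits the same way as in the GPT-4o example.

```python
import string

# Hypothetical subword vocabulary: a few multi-character pieces plus every
# single letter, so any word made of English letters can be segmented.
VOCAB = {"Gr", "amm", "arly", "loves", "grammar", "writing"} | set(string.ascii_letters)

def segment(word: str) -> list[str]:
    """Greedy longest-match: repeatedly take the longest vocabulary
    entry that matches the start of the remaining text."""
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest candidate first, shrinking until one is in VOCAB.
        for end in range(len(word), start, -1):
            if word[start:end] in VOCAB:
                pieces.append(word[start:end])
                start = end
                break
        else:
            raise ValueError(f"cannot segment {word[start:]!r}")
    return pieces

print(segment("Grammarly"))  # ['Gr', 'amm', 'arly']
print(segment("writing"))    # ['writing']
print(segment("Grok"))       # ['Gr', 'o', 'k']
```

Common words stay whole, while rare or novel ones like “Grok” are built from existing pieces instead of failing.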

Character tokenization

Character tokenization splits text into individual characters. Here’s how our example would look:

Every unique character becomes its own token. This requires the smallest vocabulary, since the English alphabet has only 26 letters (52 if uppercase and lowercase are treated as different), plus several punctuation marks. Since any English word must be formed from these characters, character tokenization can work with any new or rare word.
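A character tokenizer needs almost no machinery. This sketch builds a vocabulary from Python's `string` constants; including digits is our own assumption beyond the letters and punctuation mentioned above.

```python
import string

# Character vocabulary: 52 letters (lowercase, then uppercase), 10 digits,
# the space, and the 32 ASCII punctuation marks.
CHARS = string.ascii_letters + string.digits + " " + string.punctuation
CHAR_TO_ID = {ch: i for i, ch in enumerate(CHARS)}

def encode(text: str) -> list[int]:
    """Every character is its own token."""
    return [CHAR_TO_ID[ch] for ch in text]

print(len(string.ascii_letters))  # 52
print(len(CHARS))                 # 95
print(encode("car"))              # [2, 0, 17]
```

The vocabulary stays under 100 entries, but even a short word like “car” already costs three tokens.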

However, by standard LLM benchmarks, character tokenization doesn’t perform as well as subword tokenization in practice. The subword token “car” contains much more information than the character token “c,” so the attention mechanism in transformers has more information to run on.

Sentence tokenization

Sentence tokenization turns each sentence in the text into its own token. Our example would look like:

The benefit is that each token contains a lot of information. However, there are several drawbacks. Since there are infinitely many ways to combine words into sentences, the vocabulary would need to be infinite as well.

Additionally, each sentence itself would be pretty rare since even minute differences (such as “as well” instead of “and”) would mean a different token despite having the same meaning. Training and inference would be a nightmare. Sentence tokenization is used in specialized use cases such as sentence sentiment analysis, but otherwise, it’s a rare sight.
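Here is a naive sentence splitter, sketched with a regular expression of our own choosing; it would mishandle abbreviations such as “e.g.” and is not how production sentence tokenizers work. It also shows why a sentence vocabulary explodes: two sentences that differ by a couple of words become two unrelated tokens.

```python
import re

def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after ., !, or ? when followed by whitespace."""
    return re.split(r"(?<=[.!?])\s+", text.strip())

text = "Grammarly loves grammar and writing. It loves ML as well!"
print(split_sentences(text))
# ['Grammarly loves grammar and writing.', 'It loves ML as well!']

# Near-identical sentences still get distinct IDs in a sentence vocabulary.
vocab = {}
for sentence in ["Grammarly loves grammar and ML and writing.",
                 "Grammarly loves grammar and ML as well as writing."]:
    vocab.setdefault(sentence, len(vocab))
print(vocab)
```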

Tokenization tradeoff: efficiency vs. performance

Choosing the right granularity of tokenization for a model comes down to a tradeoff between efficiency and performance. With very large tokens (e.g., at the sentence level), the vocabulary becomes massive. The model's training efficiency drops because the matrix that holds all these tokens is huge. Performance plummets because there isn't enough training data for the model to meaningfully learn relationships among all the unique tokens.

On the other end, with small tokens, the vocabulary becomes small. Training becomes efficient, but performance may plummet since each token doesn’t contain enough information for the model to learn token-token relationships.

Subword tokenization is right in the middle. Each token has enough information for models to learn relationships, but the vocabulary is not so large that training becomes inefficient.
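One way to see the tradeoff is to count how many tokens the same sentence needs at each granularity: larger tokens mean shorter sequences but bigger vocabularies, and smaller tokens mean the reverse. This sketch uses simple splitting rules (whole text, whitespace, individual characters) as stand-ins for real tokenizers.

```python
text = "Grammarly loves grammar and ML and writing"

granularities = {
    "sentence": [text],       # the whole sentence is one token
    "word": text.split(),     # 7 tokens
    "character": list(text),  # 42 tokens, spaces included
}

for name, tokens in granularities.items():
    print(f"{name:>9}: {len(tokens):>2} tokens")
```

A subword tokenizer would land between the word and character counts for this sentence.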

How tokenization works

Tokenization revolves around the training and use of tokenizers. Tokenizers convert text into tokens and tokens back into text. We’ll discuss subword tokenizers here since they are the most popular type.

Subword tokenizers must be trained to split text effectively.

Why does “Grammarly” get split into “Gr,” “amm,” and “arly”? Couldn't “Gram,” “mar,” and “ly” also work? To a human eye, they definitely could, but the tokenizer learned its splits from the statistics of its training data, so it thinks differently. A common algorithm for learning these splits is byte-pair encoding (BPE); GPT-4o's tokenizer uses a byte-level variant of it. We'll explain BPE in the next section.

Tokenizer training

To train a good tokenizer, you need a massive corpus of text to train on. Running BPE on this corpus works as follows:

- Split all the text in the corpus into individual characters. Set these as the starting tokens in the vocabulary.

- Find the pair of adjacent tokens that occurs most often in the text, merge it into one new token, and add that token to the vocabulary. Do not delete the old tokens; this is important.

- Repeat this process until there are no remaining frequently occurring pairs of adjacent tokens, or the maximum vocabulary size has been reached.

As an example, assume that our entire training corpus consists of the text “abc abcd”:

- The text would be split into [“a”, “b”, “c”, “ ”, “a”, “b”, “c”, “d”]. Note that the fourth entry in that list is a space character. Our vocabulary would then be [“a”, “b”, “c”, “ ”, “d”].

- “a” and “b” most frequently occur next to each other in the text (tied with “b” and “c” but “a” and “b” win alphabetically). So, we combine them into one token, “ab”. The vocabulary now looks like [“a”, “b”, “c”, “ ”, “d”, “ab”], and the updated text (with the “ab” token merge applied) looks like [“ab”, “c”, “ ”, “ab”, “c”, “d”].

- Now, “ab” and “c” occur most frequently together in the text. We merge them into the token “abc”. The vocabulary then looks like [“a”, “b”, “c”, “ ”, “d”, “ab”, “abc”], and the updated text looks like [“abc”, “ ”, “abc”, “d”].

- We end the process here, since each adjacent pair of tokens now occurs only once. Merging pairs that appear only once would just memorize this particular text rather than learn patterns that carry over to other texts. In practice, training on a real corpus usually stops when the vocabulary reaches its maximum size.
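The training loop above can be sketched in Python. This is a simplified, character-level version that follows the walkthrough exactly: ties are broken alphabetically, and training stops when no pair occurs at least `min_count` times (a threshold we set to 2 as an assumption) or when `max_vocab_size` is reached. Production BPE tokenizers add details, such as working on bytes and pre-splitting text into words, that are omitted here.

```python
from collections import Counter

def count_pairs(tokens: list[str]) -> Counter:
    """Count how often each adjacent pair of tokens occurs."""
    return Counter(zip(tokens, tokens[1:]))

def merge_pair(tokens: list[str], pair: tuple[str, str]) -> list[str]:
    """Replace every adjacent occurrence of `pair` with one merged token."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged

def train_bpe(corpus: str, max_vocab_size: int = 1000, min_count: int = 2):
    tokens = list(corpus)               # step 1: split into characters
    vocab = list(dict.fromkeys(tokens))  # unique characters, first-seen order
    merges = []
    while len(vocab) < max_vocab_size:
        pairs = count_pairs(tokens)
        if not pairs:
            break
        # Most frequent pair; ties go to the alphabetically smallest pair.
        best = min(pairs, key=lambda p: (-pairs[p], p))
        if pairs[best] < min_count:     # no frequently occurring pairs left
            break
        merges.append(best)
        vocab.append(best[0] + best[1])  # old tokens stay in the vocabulary
        tokens = merge_pair(tokens, best)
    return vocab, merges, tokens

vocab, merges, tokens = train_bpe("abc abcd")
print(vocab)   # ['a', 'b', 'c', ' ', 'd', 'ab', 'abc']
print(merges)  # [('a', 'b'), ('ab', 'c')]
print(tokens)  # ['abc', ' ', 'abc', 'd']
```

The outputs match the three steps of the worked example, including the alphabetical tie-break between “ab” and “bc.”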

With our new vocabulary, we can map between text and tokens. Even text that we haven't seen before, like “cab,” can be tokenized because we didn't discard the single-character tokens. We can also get each token's number by simply looking up its position in the vocabulary.
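Using a token's position in the vocabulary as its ID looks like this for the toy vocabulary learned from “abc abcd.” The `token_id` helper is a hypothetical name; a real tokenizer would typically use a dictionary for faster lookups than `list.index`.

```python
# Vocabulary learned from "abc abcd", in the order tokens were added.
VOCAB = ["a", "b", "c", " ", "d", "ab", "abc"]

def token_id(token: str) -> int:
    """A token's ID is simply its position in the vocabulary."""
    return VOCAB.index(token)

print([token_id(t) for t in ["abc", " ", "abc", "d"]])  # [6, 3, 6, 4]

# Because the single characters were kept, unseen text like "cab"
# can always fall back to character tokens.
print(all(ch in VOCAB for ch in "cab"))  # True
```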

Good tokenizer training requires extremely high volumes of data and a lot of compute, more than most companies can afford. Companies get around this by skipping the training of their own tokenizer. Instead, they use a pre-trained tokenizer (such as the GPT-4o tokenizer mentioned above) to save time and money with minimal, if any, loss in model performance.

Using the tokenizer

So, we have this subword tokenizer trained on a massive corpus using BPE. Now, how do we use it on a new piece of text?

We apply the merge rules we determined in the tokenizer training process. We first split the input text into characters. Then, we do token merges in the same order as in training.

To illustrate, we’ll use a slightly different input text of “dc abc”:

- We split it into characters [“d”, “c”, “ ”, “a”, “b”, “c”].

- The first merge we did in training was “ab,” so we do that here: [“d”, “c”, “ ”, “ab”, “c”].

- The second merge we did was “abc,” so we do that next: [“d”, “c”, “ ”, “abc”].

- Those are the only merge rules we have, so we are done tokenizing, and we can return the token IDs.
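The steps above can be sketched as a function that splits text into characters and then replays the learned merges in training order. The merge list and vocabulary are the ones from the toy training example; the function names are our own.

```python
# Merge rules learned during training, in the order they were learned.
MERGES = [("a", "b"), ("ab", "c")]
VOCAB = ["a", "b", "c", " ", "d", "ab", "abc"]

def apply_merge(tokens: list[str], pair: tuple[str, str]) -> list[str]:
    """Replace every adjacent occurrence of `pair` with the merged token."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged

def tokenize(text: str) -> list[str]:
    tokens = list(text)      # step 1: split into characters
    for pair in MERGES:      # step 2: replay merges in training order
        tokens = apply_merge(tokens, pair)
    return tokens

def encode(text: str) -> list[int]:
    """Token IDs are positions in the vocabulary."""
    return [VOCAB.index(token) for token in tokenize(text)]

print(tokenize("dc abc"))  # ['d', 'c', ' ', 'abc']
print(encode("dc abc"))    # [4, 2, 3, 6]
print(tokenize("cab"))     # ['c', 'ab']
```

The unseen word “cab” picks up the “ab” merge but not “abc,” since its “c” comes first.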

If we have a sequence of token IDs and want to convert it back into text, we simply look up each ID in the vocabulary and return its associated text. LLMs do this at the end of each generation step: the model's final embeddings (vectors of numbers that capture the meaning of tokens in the context of the surrounding tokens) are turned into a token ID, and the tokenizer converts that ID back into human-readable text.
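Decoding with the toy vocabulary is a lookup followed by concatenation. This is a minimal sketch; no separators are added because the space is a token in its own right.

```python
VOCAB = ["a", "b", "c", " ", "d", "ab", "abc"]

def decode(token_ids: list[int]) -> str:
    """Look up each ID's text and join the pieces back together."""
    return "".join(VOCAB[token_id] for token_id in token_ids)

print(decode([4, 2, 3, 6]))  # dc abc
print(decode([6, 3, 6, 4]))  # abc abcd
```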

Tokenization applications

Tokenization is the first step in almost every NLP system, since turning text into forms that ML models (and computers) can work with requires it.

Tokenization in LLMs

Tokenization is usually the first and last step of every LLM call. The text is first turned into tokens; the tokens are then converted to embeddings that capture each token's meaning and passed through the main parts of the model (the transformer blocks). After the transformer blocks run, the final embedding is used to choose the next token. That new token is appended to the input and passed back into the model, and the process repeats until the response is complete. LLMs use subword tokenization to balance performance and efficiency.
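The generation loop can be sketched with a stand-in for the model. Everything here is hypothetical: `toy_next_token` is a fixed lookup table rather than a transformer, and ID 0 is an assumed end-of-sequence marker. The point is the loop structure: encode, predict one token, append it, repeat, then decode.

```python
# Toy vocabulary; ID 0 is a hypothetical end-of-sequence marker.
VOCAB = ["<eos>", "Grammarly", " loves", " grammar"]

def toy_next_token(token_ids: list[int]) -> int:
    """Stand-in for the transformer: a fixed lookup from the last token
    to the next one. A real LLM scores every token in the vocabulary."""
    next_after = {1: 2, 2: 3, 3: 0}
    return next_after[token_ids[-1]]

def generate(prompt_ids: list[int], max_new_tokens: int = 10) -> list[int]:
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        next_id = toy_next_token(ids)  # run the "model" on everything so far
        if next_id == 0:               # stop at end-of-sequence
            break
        ids.append(next_id)            # feed the new token back in
    return ids

output_ids = generate([1])
print(output_ids)                             # [1, 2, 3]
print("".join(VOCAB[i] for i in output_ids))  # Grammarly loves grammar
```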

Tokenization in search engines

Search engines tokenize user queries to standardize them and to better understand user intent. Search engine tokenization might involve splitting text into words, removing filler words (such as “the” or “and”), turning uppercase into lowercase, and dealing with characters like hyphens. Subword tokenization usually isn’t necessary here since performance and efficiency are less dependent on vocabulary size.
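A minimal query normalizer covering those steps might look like the sketch below. The stop-word list is a small hypothetical one, and splitting hyphenated words apart is one design choice among several (some systems keep or join them instead).

```python
import re

# Small, hypothetical stop-word list; real search engines use larger,
# language-specific lists.
STOP_WORDS = {"the", "a", "an", "and", "or", "of", "for"}

def normalize_query(query: str) -> list[str]:
    """Lowercase, split on anything that isn't a letter or digit
    (this also breaks hyphenated words apart), and drop stop words."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    return [word for word in words if word not in STOP_WORDS]

print(normalize_query("The best grammar-checker for ML and writing"))
# ['best', 'grammar', 'checker', 'ml', 'writing']
```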

Tokenization in machine translation

Machine translation tokenization is interesting since the input and output languages are different. As a result, there may be two tokenizers, one for each language, although some systems share a single tokenizer across languages. Subword tokenization usually works best since it balances the tradeoff between model efficiency and model performance. But some languages, such as Chinese, don't mark word boundaries with spaces, and individual characters often carry meaning on their own. For these languages, character-level tokenization is a common choice.

Benefits of tokenization

Tokenization is a must-have for any NLP model. Good tokenization lets ML models work efficiently with text and handle new words well.

Tokenization lets models work with text

Internally, ML models only work with numbers: every operation they perform is a computation on numbers. So text must be turned into numbers before ML models can work with it. After tokenization, techniques like embedding and attention can be run on those numbers.

Tokenization generalizes to new and rare text

Or more accurately, good tokenization generalizes to new and rare text. With subword and character tokenization, new texts can be decomposed into sequences of existing tokens. So, pasting an article with gibberish words into ChatGPT won’t cause it to break (though it may not give a very coherent response either). Good generalization also allows models to learn relationships among rare words, based on the relationships in the subtokens.

Challenges of tokenization

Tokenization depends on the training corpus and the algorithm, so results can vary. This can affect LLMs’ reasoning abilities and their input and output length.

Tokenization affects reasoning abilities of LLMs

An easy problem that often stumps LLMs is counting the occurrences of the letter “r” in the word “strawberry.” Models have often answered two, though the correct answer is three. This error may be partly due to tokenization. The subword tokenizer split “strawberry” into “st,” “raw,” and “berry,” so the model may not have connected the one “r” in the middle token to the two “r”s in the last token. The tokenization algorithm chosen directly affects how words get tokenized and how each token relates to the others.
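The split matters because the model sees token IDs, not letters. This small sketch, which hard-codes the split described above rather than calling a real tokenizer, shows how the three r's are spread unevenly across the tokens.

```python
# Split of "strawberry" as described above (hard-coded, not computed).
tokens = ["st", "raw", "berry"]

per_token = [token.count("r") for token in tokens]
print(per_token)                # [0, 1, 2]
print(sum(per_token))           # 3
print("strawberry".count("r"))  # 3
```

Counting characters is trivial in code, but a model has to infer from training data that the token “berry” happens to contain two r's.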

Tokenization affects LLM input and output length

LLMs are mostly built on the transformer architecture, which relies on the attention mechanism to contextualize each token. However, the time needed for attention grows quadratically with the number of tokens. So, a text with four tokens takes 16 units of time, while a text with eight tokens takes 64. This is one reason LLMs have input and output limits, often in the range of a few hundred thousand tokens. With smaller tokens, the same text takes up more tokens, so these limits cover less text, reducing the number of tasks you can use the model for.
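The arithmetic above can be checked with a simplified cost model that counts only the token-to-token attention scores and ignores constant factors and the rest of the model. The word and character counts come from simple splitting of the running example, not from a real LLM tokenizer.

```python
def attention_cost(num_tokens: int) -> int:
    """Relative cost of self-attention: every token attends to every token."""
    return num_tokens * num_tokens

print(attention_cost(4))  # 16
print(attention_cost(8))  # 64

text = "Grammarly loves grammar and ML and writing"
word_tokens = len(text.split())  # 7
char_tokens = len(text)          # 42
# Character tokens make this sentence 6x longer, so attention costs 36x more.
print(attention_cost(char_tokens) // attention_cost(word_tokens))  # 36
```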