Apple Vision Pro introduced new ways of controlling apps through gestures, but it appears that Apple wants to extend that to controlling any device it makes.

Before the Apple Vision Pro, if you gestured at a computer, it was probably in frustration. With the Apple Vision Pro, though, Apple introduced a whole collection of gestures for everything from moving windows to resizing documents.

They are some of the finest elements of visionOS on the Apple Vision Pro, and it's remarkable how complete they seem. This is a whole set of gestures that, once you've been shown them, feel so natural that it's hard to imagine alternatives, or to remember that they are so new.

A newly revealed patent application suggests that Apple is pretty pleased with these gestures, too. Using them across more devices is the whole point of "Devices, Methods, And Graphical User Interfaces For Using A Cursor To Interact With Three-Dimensional Environments."

The idea is far from new. Apple even applied for a similar patent back in 2009, but this new filing is bolstered by the success of the gestures on the Apple Vision Pro.

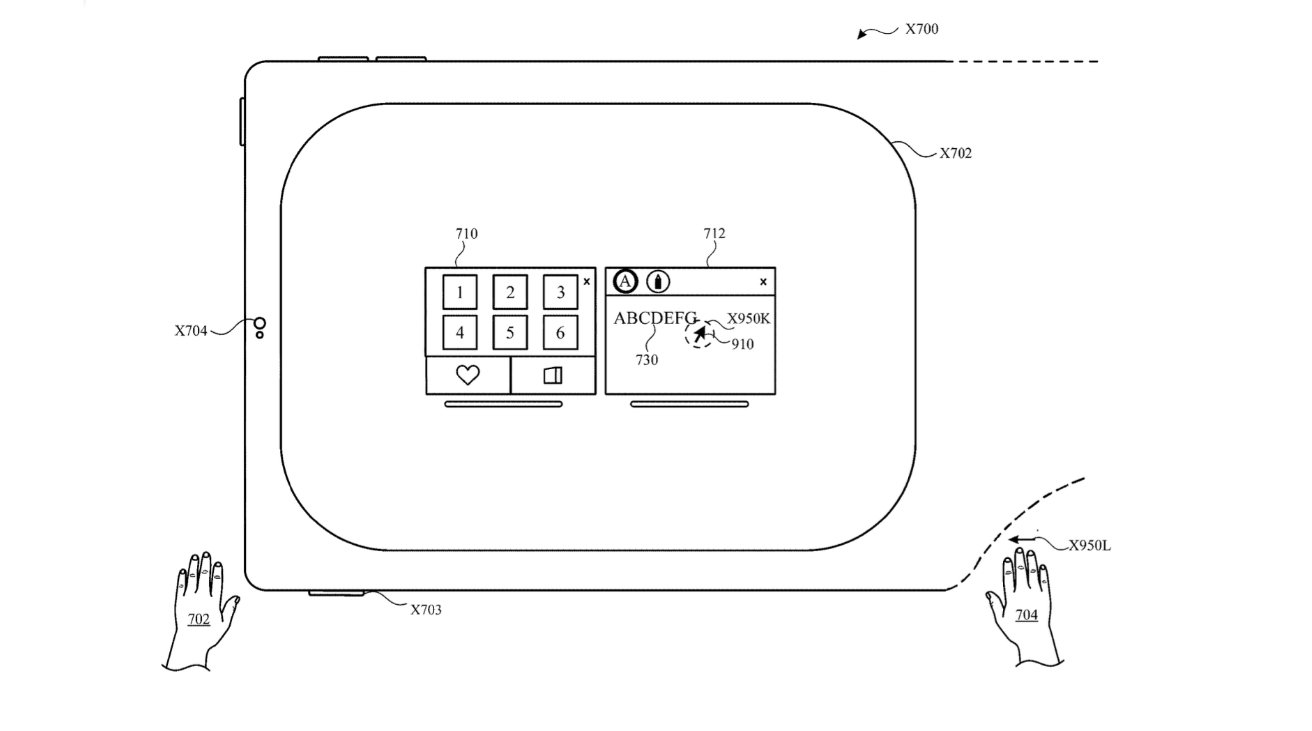

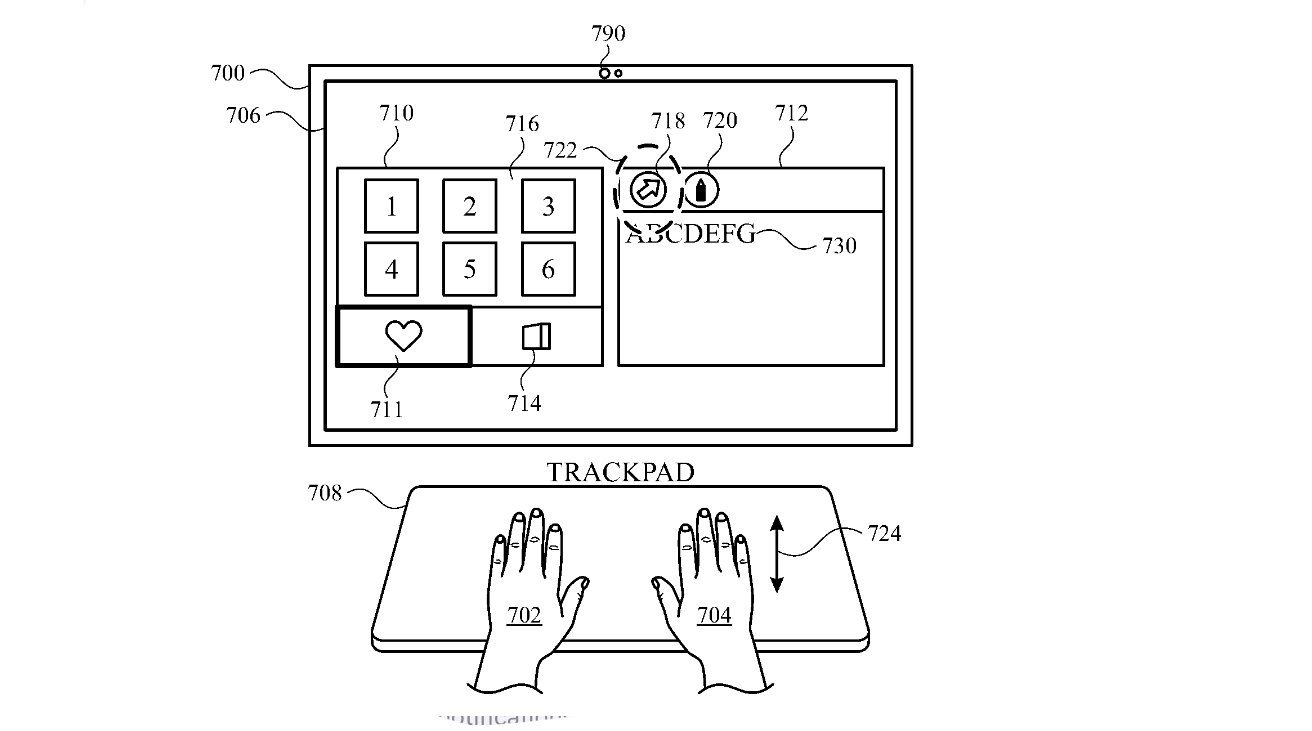

The patent application begins with illustrative drawings and diagrams, 36 of which relate to the Apple Vision Pro. There are even component breakdowns of the headset, and there are clear signs that Apple is thinking about how the Apple Vision Pro could see a user's gestures and relay them to a Mac or other device being used.

But then there are stick figure drawings showing a user holding a regular iPad, or standing in front of a rather archaic-looking tower computer with a monitor. In neither case is the user wearing a headset.

Apple says this patent application is for devices “that provide computer-generated experiences, including, but not limited to, electronic devices that provide virtual reality and mixed reality experiences via a display.”

It makes sense that users shouldn't have to wear a headset. Right now, iPhones and many iPads have Face ID sensors that scan the whole face, so it's no leap to see them detecting a wave of the hand in front of them.

And Apple is already using the Face ID sensors for more than biometrics and unlocking devices. With iOS 18, these sensors let users navigate their iPhones entirely by looking at them.

This new patent application concentrates on the steps needed to correctly recognize a gesture, and also to reject false positives, such as someone scratching their nose.

Where there is "a determination that the movement of the respective hand satisfies a first set of criteria," the gesture can, for example, result in "changing a position of a cursor based on the movement of the respective hand." If the system instead determines that the gesture doesn't meet those criteria, it ignores the movement.
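To make the idea of a "first set of criteria" a little more concrete, here is a minimal Python sketch of how such a gate might work. The patent application publishes no code, so everything here is a hypothetical illustration: the `HandSample` and `Cursor` types, the "ready pose" flag, and the distance thresholds are assumptions, not Apple's method.

```python
from dataclasses import dataclass


@dataclass
class HandSample:
    # Hypothetical hand-tracking sample: position in metres relative to the sensor.
    x: float
    y: float
    in_ready_pose: bool  # hypothetical flag, e.g. hand raised in a recognized pose


@dataclass
class Cursor:
    x: float = 0.0
    y: float = 0.0


# Hypothetical thresholds standing in for the patent's "first set of criteria".
MIN_MOVE = 0.005  # ignore jitter smaller than 5 mm between samples
MAX_MOVE = 0.10   # ignore sudden jumps larger than 10 cm between samples
GAIN = 2000.0     # screen points per metre of hand movement


def update_cursor(cursor: Cursor, prev: HandSample, curr: HandSample) -> bool:
    """Move the cursor only if the hand movement satisfies every criterion.

    Returns True if the cursor moved, False if the movement was ignored.
    """
    dx, dy = curr.x - prev.x, curr.y - prev.y
    distance = (dx * dx + dy * dy) ** 0.5
    satisfies_criteria = (
        prev.in_ready_pose
        and curr.in_ready_pose
        and MIN_MOVE <= distance <= MAX_MOVE
    )
    if not satisfies_criteria:
        return False  # treat as a false positive and leave the cursor alone
    cursor.x += dx * GAIN
    cursor.y += dy * GAIN
    return True
```

In this sketch, moving a raised hand a couple of centimeters nudges the cursor, while the same movement with the hand out of its ready pose (a nose scratch, say), or a sudden large jump, is simply ignored.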

Since this is a patent application, that's as far as it goes in describing its purpose, because patents are typically light on use cases. This one is more repetitive than most, as it separately works through each step of recognizing a gesture, and each of the different results, from cursor movement to moving windows.

But while the patent application doesn't even hint at this, there would be one obvious outcome of Apple implementing it. The company has always said it won't put a touchscreen into a Mac because it's ergonomically bad for users to keep reaching up to the screen.

With this idea, users wouldn't have to touch the screen; they could just wave to tell a document where to go.

This patent application is credited to three inventors. They include Evgenii Krivoruchko, whose previous work for Apple includes patents for using users’ attention to control elements in a 3D environment.