By the time changes have made their way to the legacy database, you could argue that it is too late for event interception.

That said, “Pre-commit” triggers can be used to intercept a database write event and take different actions.

For example, a row could be inserted into a separate Events table to be read and processed by a new component, whilst the write proceeds as before (or is aborted).
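
A minimal sketch of the trigger idea follows. It uses SQLite through Python's built-in `sqlite3` module only because that needs no setup. The `orders` and `events` tables, their columns and the trigger names are hypothetical. SQLite has no trigger that fires literally at commit time, but its row-level `BEFORE`/`AFTER` triggers run inside the writing transaction, so the event row is committed or rolled back together with the legacy write. The second trigger shows the riskier option of aborting a write.

```python
import sqlite3

# Hypothetical schema: an existing legacy "orders" table plus a new "events"
# table that a new component can read from.
SCHEMA = """
CREATE TABLE orders (
    id     INTEGER PRIMARY KEY,
    status TEXT NOT NULL
);

CREATE TABLE events (
    seq        INTEGER PRIMARY KEY AUTOINCREMENT,
    event_type TEXT NOT NULL,
    order_id   INTEGER NOT NULL,
    status     TEXT
);

-- Optionally abort the write: reject a status the new component cannot handle.
CREATE TRIGGER orders_reject_unknown_status
BEFORE UPDATE OF status ON orders
WHEN NEW.status NOT IN ('NEW', 'PAID', 'SHIPPED')
BEGIN
    SELECT RAISE(ABORT, 'unknown order status');
END;

-- Record each status change as an event; the legacy UPDATE itself is unchanged.
CREATE TRIGGER orders_status_event
AFTER UPDATE OF status ON orders
BEGIN
    INSERT INTO events (event_type, order_id, status)
    VALUES ('OrderStatusChanged', NEW.id, NEW.status);
END;
"""


def connect() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def poll_events(conn: sqlite3.Connection, after_seq: int) -> list:
    """What a new component might run: fetch events newer than the last one it processed."""
    return conn.execute(
        "SELECT seq, event_type, order_id, status FROM events "
        "WHERE seq > ? ORDER BY seq",
        (after_seq,),
    ).fetchall()


if __name__ == "__main__":
    conn = connect()
    with conn:  # legacy code path, unchanged
        conn.execute("INSERT INTO orders (id, status) VALUES (1, 'NEW')")
        conn.execute("UPDATE orders SET status = 'PAID' WHERE id = 1")
    try:
        with conn:  # this write is rejected by the BEFORE trigger
            conn.execute("UPDATE orders SET status = 'LOST' WHERE id = 1")
    except sqlite3.DatabaseError as exc:
        print("write aborted:", exc)
    print(poll_events(conn, 0))
```

The `seq` column gives the new component a simple cursor. The trigger also adds work to every legacy write, which is part of the care the next paragraph calls for.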

Note that significant care should be taken if you change the existing write behaviour, as you may be breaking a vital implicit contract.

Case Study: Incremental domain extraction

One of our teams was working for a client whose legacy system had stability issues and had become difficult to maintain and slow to update.

The organisation was looking to remedy this, and it had been decided that the most appropriate way forward for them was to displace the legacy system with capabilities realised by a Service Based Architecture.

The strategy that the team adopted was to use the Strangler Fig pattern and extract domains, one at a time, until little or none of the original application was left.

Other considerations that were in play included:

- The need to continue to use the legacy system without interruption

- The need to continue to allow maintenance and enhancement to the legacy system (though minimising changes to domains being extracted was allowed)

- Changes to the legacy application were to be minimised, as there was an acute shortage of retained knowledge of the legacy system

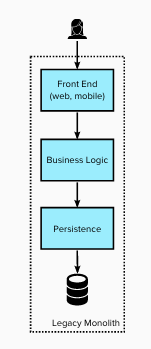

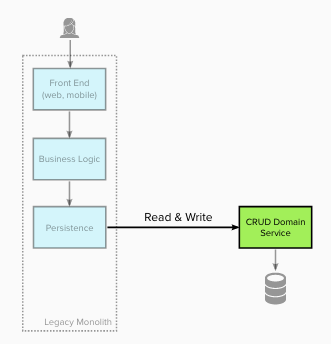

Legacy state

The diagram below shows the architecture of the legacy system. The monolith was structured primarily as Presentation-Domain-Data Layers.

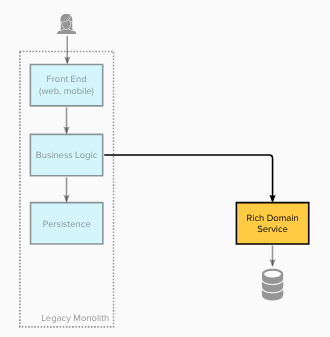

Stage 1 – Dark launch service(s) for a single domain

Firstly, the team created a set of services for a single business domain, along with the capability for the data exposed by these services to stay in sync with the legacy system.

The services were Dark Launched, i.e. not used by any consumers. Instead, they allowed the team to validate that data migration and synchronisation achieved 100% parity with the legacy datastore.

Where reconciliation checks revealed issues, the team could reason about and fix them, ensuring consistency was achieved without business impact.
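
A reconciliation check could look something like the following sketch. It compares records keyed by identifier from the legacy datastore and from the dark-launched service, and reports records that are missing, unexpected or mismatched field by field. The function and field names are hypothetical, and both sides are assumed to be already loaded into dictionaries; at real data volumes a batched or checksum-based comparison would be more likely.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Mapping, Tuple

Record = Mapping[str, Any]


@dataclass
class ReconciliationReport:
    missing_in_new: List[Any] = field(default_factory=list)     # keys only in legacy
    unexpected_in_new: List[Any] = field(default_factory=list)  # keys only in new service
    # key -> {field name: (legacy value, new value)}
    mismatched: Dict[Any, Dict[str, Tuple[Any, Any]]] = field(default_factory=dict)

    @property
    def at_parity(self) -> bool:
        return not (self.missing_in_new or self.unexpected_in_new or self.mismatched)


def reconcile(legacy: Mapping[Any, Record], new: Mapping[Any, Record]) -> ReconciliationReport:
    """Compare two keyed snapshots of the same domain data, field by field."""
    report = ReconciliationReport(
        missing_in_new=sorted(legacy.keys() - new.keys()),
        unexpected_in_new=sorted(new.keys() - legacy.keys()),
    )
    for key in sorted(legacy.keys() & new.keys()):
        old, cur = legacy[key], new[key]
        diffs = {
            name: (old.get(name), cur.get(name))
            for name in old.keys() | cur.keys()
            if old.get(name) != cur.get(name)
        }
        if diffs:
            report.mismatched[key] = diffs
    return report


if __name__ == "__main__":
    legacy = {1: {"status": "PAID"}, 2: {"status": "NEW"}, 3: {"status": "SHIPPED"}}
    new = {1: {"status": "PAID"}, 2: {"status": "SHIPPED"}}
    report = reconcile(legacy, new)
    print(report.at_parity, report)
```

Reporting each discrepancy with both values, rather than a single pass/fail flag, is what lets a team reason about why the two sides diverged.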

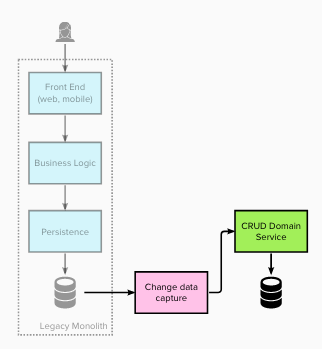

The migration of historical data was achieved through a “single shot” data migration process. Ongoing synchronisation, whilst not strictly Event Interception, was achieved using a Change Data Capture (CDC) process.
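
The sketch below shows one way a single-shot snapshot and an ongoing change feed can fit together. It relies on some assumptions: the change feed is simulated as a list of `ChangeEvent` objects carrying a log position (`lsn`), whereas real CDC tooling typically reads the source database's transaction log. The snapshot is assumed to have been taken at a known log position, so events at or before it are skipped. Tracking the last applied position also makes replaying the feed harmless. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional

Row = Dict[str, Any]


@dataclass(frozen=True)
class ChangeEvent:
    lsn: int                   # position in the (simulated) source change log
    op: str                    # "insert", "update" or "delete"
    key: int
    row: Optional[Row] = None  # full row image; None for deletes


class ReplicatedStore:
    """Hypothetical datastore behind the dark-launched domain service."""

    def __init__(self, snapshot: Dict[int, Row], snapshot_lsn: int) -> None:
        # Single-shot migration: start from a bulk copy taken at a known log position.
        self.rows: Dict[int, Row] = {k: dict(v) for k, v in snapshot.items()}
        self.applied_lsn = snapshot_lsn

    def apply(self, events: Iterable[ChangeEvent]) -> None:
        """Apply change events in log order, skipping any already reflected."""
        for event in sorted(events, key=lambda e: e.lsn):
            if event.lsn <= self.applied_lsn:
                continue  # covered by the snapshot or by an earlier apply()
            if event.op == "delete":
                self.rows.pop(event.key, None)
            elif event.op in ("insert", "update"):
                self.rows[event.key] = dict(event.row or {})
            else:
                raise ValueError(f"unknown change operation: {event.op!r}")
            self.applied_lsn = event.lsn


if __name__ == "__main__":
    store = ReplicatedStore({1: {"status": "NEW"}}, snapshot_lsn=10)
    feed = [
        ChangeEvent(9, "insert", 1, {"status": "NEW"}),  # older than the snapshot
        ChangeEvent(11, "update", 1, {"status": "PAID"}),
        ChangeEvent(12, "insert", 2, {"status": "NEW"}),
        ChangeEvent(13, "delete", 2),
    ]
    store.apply(feed)
    store.apply(feed)  # replaying the same feed changes nothing
    print(store.rows, store.applied_lsn)
```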

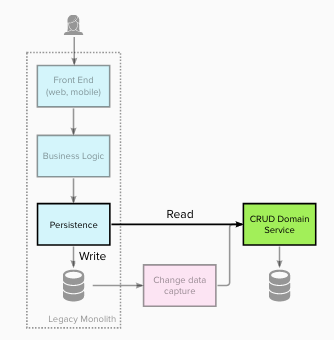

Stage 2 – Intercept all reads and redirect to the new service(s)

For stage 2, the team updated the legacy Persistence Layer to intercept all read operations for this domain and redirect them to retrieve the data from the new domain service(s). Write operations still used the legacy data store. This is an example of Branch by Abstraction: the interface of the Persistence Layer remains unchanged while a new underlying implementation is put in place.
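
Branch by Abstraction at this stage might look like the sketch below. `CustomerPersistence` stands in for the existing Persistence Layer interface; the customer domain, the class names and the `CustomerServiceClient` API are all hypothetical. Callers keep depending on the abstraction while the implementation behind it is swapped for one that reads from the new service and still writes to the legacy store.

```python
from abc import ABC, abstractmethod
from typing import Dict, Optional


class CustomerPersistence(ABC):
    """The existing Persistence Layer interface that the Domain Layer calls."""

    @abstractmethod
    def get(self, customer_id: int) -> Optional[dict]: ...

    @abstractmethod
    def save(self, customer_id: int, data: dict) -> None: ...


class LegacyCustomerPersistence(CustomerPersistence):
    """Original implementation: reads and writes the legacy datastore."""

    def __init__(self, legacy_db: Dict[int, dict]) -> None:
        self.legacy_db = legacy_db

    def get(self, customer_id: int) -> Optional[dict]:
        return self.legacy_db.get(customer_id)

    def save(self, customer_id: int, data: dict) -> None:
        self.legacy_db[customer_id] = dict(data)


class CustomerServiceClient:
    """Stand-in for a client of the new domain service (hypothetical API)."""

    def __init__(self, records: Dict[int, dict]) -> None:
        self.records = records  # assumed to be kept in sync with legacy by CDC

    def fetch_customer(self, customer_id: int) -> Optional[dict]:
        return self.records.get(customer_id)


class ReadRedirectingCustomerPersistence(CustomerPersistence):
    """Stage 2 implementation: same interface; reads come from the new
    service, writes still go to the legacy datastore."""

    def __init__(self, legacy: LegacyCustomerPersistence,
                 service: CustomerServiceClient) -> None:
        self.legacy = legacy
        self.service = service

    def get(self, customer_id: int) -> Optional[dict]:
        return self.service.fetch_customer(customer_id)

    def save(self, customer_id: int, data: dict) -> None:
        self.legacy.save(customer_id, data)
```

Because new writes reach the service only through synchronisation, a read immediately after a write can return stale data until CDC catches up. That lag is worth checking against the legacy system's implicit expectations.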

Stage 3 – Intercept all writes and redirect to the new service(s)

At stage 3, a number of changes occurred. Write operations (for the domain) were intercepted and redirected to create, update or remove data within the new domain service(s).

This change made the new domain service the System of Record for this data, as the legacy data store was no longer updated.
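
Using a hypothetical customer domain, a stage 3 Persistence Layer implementation could route writes to the new service as well as reads. In this sketch the method names (`get`, `save`, `delete`) and the service client API are assumptions. The class deliberately never touches the legacy datastore, which is what makes the service the System of Record.

```python
from typing import Dict, Optional


class CustomerServiceClient:
    """Hypothetical client for the new domain service, now the System of Record."""

    def __init__(self) -> None:
        self._records: Dict[int, dict] = {}

    def fetch_customer(self, customer_id: int) -> Optional[dict]:
        return self._records.get(customer_id)

    def upsert_customer(self, customer_id: int, data: dict) -> None:
        self._records[customer_id] = dict(data)

    def remove_customer(self, customer_id: int) -> None:
        self._records.pop(customer_id, None)


class ServiceBackedCustomerPersistence:
    """Stage 3: the Persistence Layer keeps the methods callers already use,
    but every read and write now goes to the new service. The legacy
    tables for this domain are no longer written to."""

    def __init__(self, service: CustomerServiceClient) -> None:
        self.service = service

    def get(self, customer_id: int) -> Optional[dict]:
        return self.service.fetch_customer(customer_id)

    def save(self, customer_id: int, data: dict) -> None:
        self.service.upsert_customer(customer_id, data)

    def delete(self, customer_id: int) -> None:
        self.service.remove_customer(customer_id)
```

One consequence is that anything still reading the legacy tables for this domain stops seeing new data.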

Any downstream usage of that data, such as reports, also had to be migrated to either become part of, or consume data from, the new domain service.

Stage 4 – Migrate domain business rules / logic to the new service(s)

At stage 4, business logic was migrated into the new domain services, transforming them from anemic “data services” into true business services. The front end remained unchanged and now used a legacy facade that redirected calls to the new domain service(s).
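
The legacy facade can be sketched as a thin class that keeps the signatures the unchanged front end already calls and delegates to the new domain service, which now owns the business rules. The order domain, the two example rules and all names are hypothetical; amounts are held as integer pence to avoid floating-point rounding.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Order:
    order_id: int
    total_pence: int
    status: str = "NEW"


class OrderDomainService:
    """New domain service: now holds the business rules, not just the data."""

    def __init__(self) -> None:
        self._orders: Dict[int, Order] = {}

    def place_order(self, order_id: int, total_pence: int) -> Order:
        # Example rule that previously lived in the legacy Domain Layer.
        if total_pence <= 0:
            raise ValueError("order total must be positive")
        order = Order(order_id, total_pence)
        self._orders[order_id] = order
        return order

    def mark_paid(self, order_id: int) -> Order:
        order = self._orders[order_id]
        # Example state-transition rule.
        if order.status != "NEW":
            raise ValueError(f"cannot pay an order in status {order.status}")
        order.status = "PAID"
        return order


class LegacyOrderFacade:
    """Exposes the operations the front end already calls, with their
    original signatures, and redirects them to the new domain service."""

    def __init__(self, service: OrderDomainService) -> None:
        self._service = service

    def create_order(self, order_id: int, total_pence: int) -> int:
        return self._service.place_order(order_id, total_pence).order_id

    def pay_order(self, order_id: int) -> bool:
        return self._service.mark_paid(order_id).status == "PAID"
```

Keeping the facade free of rules of its own means it can be deleted once the front end calls the new service directly.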